V/A/M/XR and the body as the realm of synthetic experience

Portrayals of VR have always seemed to contain the promise of a very near future where everything is possible in a world made of digital information. They range from the neural trips and cognition-enhancing hyperdrive technology portrayed in The Lawnmower Man, with legendary bold statements like "VR holds the key to the evolution of the human mind"...

...to a digital metaverse where even childhood memories can be hacked with VR, and where the good guys and bad guys fight it out in a world made of data.

VR has been around the corner as the promised future since the 80s at least, and humans have projected their desires and fears onto VR as the ultimate tech, the one that will liberate us from physical reality and bring us to a new epoch of unlimited potential. At least that was the promise.

I tried VR in 1992 as a child, when my grandfather took me to a fair, and I have been consistently disappointed (and excited) by VR ever since. VR in the 90s looked like this:

For a long time it remained a specialized medium that required very expensive equipment, accessible only to research centers, universities, the military or very specific showcases.

It wasn't until the Oculus Rift that VR became a bit more accessible to general audiences. Announced through a Kickstarter campaign in 2012, the Rift was the first headset that really seemed to fulfil the promise of a consumer-grade device that was good enough to do VR. However, despite heavy investment from major tech companies in the years around 2015, VR has so far failed to go beyond a niche and become the mass consumer medium that was predicted in the mid 2010s.

Look at this video from Microsoft, from 2016, and observe the scenarios that this promo proposes:

A Magic Leap "demo" from around the same time:

In the world of virtual augmentation, technology has so far failed to live up to the sales pitches. Although the imaginaries in these videos have not fully become reality yet, VR and related technologies continue to play an important role in the creation of immersive content. The field is diverse and has proven effective in certain applications. So even though VR is not a mass medium, it will continue to exist, and it is therefore important to understand its potential.

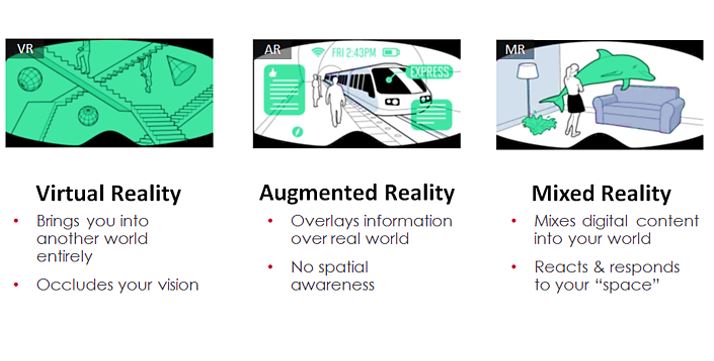

VR, AR, MR and XR: a glossary

In VR you are immersed in a completely virtual world while the physical world around you is blocked from view by the screens in front of your eyes.

In AR a digital overlay is placed on top of a video feed. Virtual objects from the digital layer do not directly interact visually with the camera feed. It works as a kind of real-time green screen.

In MR there is no video feed. The visor or headset is see-through and allows you to see the physical world around you, while at the same time that world is scanned into a digital model. This lets digital artifacts interact with the physical world, for example by being occluded by physical objects.

See engineers from Magic Leap show this interaction between physical and digital:

The term XR is used to refer to all three of the above interchangeably. Typically, people who develop content for one of these technologies are familiar with the others.

To add to the confusion, it seems that there are uses of AR that do not involve the scanning techniques used in MR but that behave and look like MR. See here:

I am afraid that this will make your Pokémon much harder to catch.

The use of Machine Learning in VR/AR is expanding the visual possibilities in ways that didn't seem possible just a few years ago. Given the pace of development and the introduction of ML techniques to the field, it doesn't seem likely that the distinction between AR and MR will continue to mean the same thing in future systems. So hold these terms lightly, because they might converge in the near future.

XR is not just one technology, but a constantly evolving assembly of different technologies that facilitate immersive experiences. It becomes a little easier to decipher the distinctions between experiences and headsets, and to understand the potential of the medium, when we know a little more about some of the underlying technologies.

Spatial Computing

Spatial computing is a modality of computing in which the interface is aware of its surroundings and takes them into account when presenting information to the user. As a general rule, any time you need to know the location, size, shape or orientation of something, you are using spatial computing.

For example, by using a smartphone's GPS location and its IMU sensor, the interface has enough information to know where the phone is located and in which direction it is pointed.
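As a rough illustration of that idea, the sketch below decides whether a point of interest falls inside the phone's horizontal field of view, given a GPS position and a compass heading. The function names, the 60 degree field of view and the assumption that the heading is already available in degrees clockwise from north are all illustrative choices, not taken from any particular app.

```python
import math

def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial compass bearing from point 1 to point 2, in degrees [0, 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0

def is_in_view(phone_lat, phone_lon, heading_deg,
               target_lat, target_lon, fov_deg=60.0):
    """True if the target lies within the horizontal field of view.

    heading_deg is assumed to come from the phone's compass/IMU,
    measured clockwise from north (hypothetical input).
    """
    target_bearing = bearing_deg(phone_lat, phone_lon, target_lat, target_lon)
    # Signed smallest angle between heading and bearing, in [-180, 180)
    offset = (target_bearing - heading_deg + 180.0) % 360.0 - 180.0
    return abs(offset) <= fov_deg / 2.0
```

A star map or location-based game could run a check like this for every object of interest and only draw the ones that return True.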

In 2009 Google released Sky Map. Early versions of Sky Map were, technically speaking, not exactly AR, but they used principles of spatial computing to show users a star map that was relevant to their location.

In 2013 Niantic Labs (a Google spin-off) launched Ingress, a game that took some of these ideas on spatial computing, combined them with Google's mapping products and advertising channels, and turned them into a viable gaming platform. Ingress was the first game of its kind, and it innovated in that it encouraged people to leave their usual gaming rooms and go out into the streets to play with others in an enlarged virtual community. It was a kind of role-playing game.

Niantic Labs further improved this combination of technologies to bring to the world Pokemon GO, which I hope I do not have to explain. Ingress was rather successful, but Pokemon GO was in a category all of its own, a social phenomenon rather than a game. This is when Snorlax appeared in Beitou district in Taipei.

Stereo vision

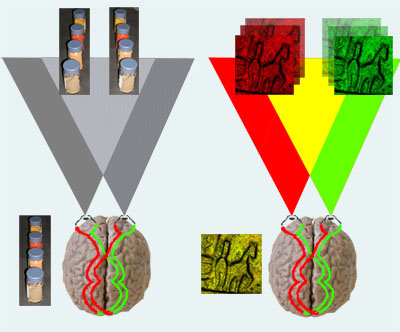

Part of the experience of feeling immersed in a scene comes from the fact that your entire visual field is presented with a stereoscopic image. The way to achieve the sensation of depth is by providing a stimulus that matches your visual system. This principle has been known for a long time, and stereo viewers are a novelty item almost as old as photography.

If you are a human with normal vision, you very likely have two eyes. Each of these eyes occupies a different position in your head. They are separated by what is called the interocular distance, a separation that is different in every person and averages around 6.5cm. One eye receives a slightly different visual stimulus than the other, and when your brain reconstructs the image in your visual cortex, it uses the differences between these two images to create a sensation of depth and an awareness of space.

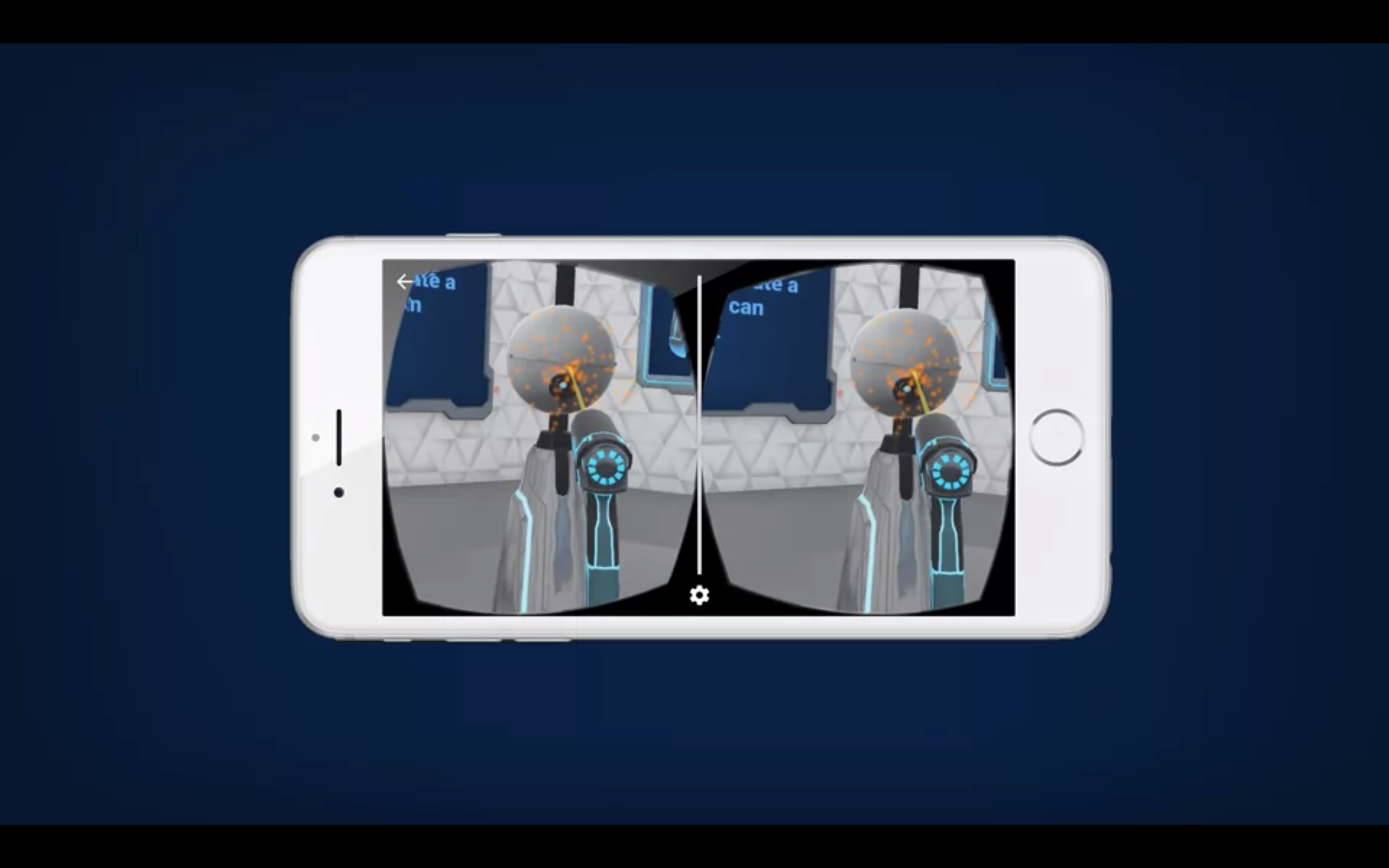

To simulate how visual stimuli work in your biological vision system, a computer produces two images from two virtual perspectives at a slight distance from each other and presents one to each eye. All immersive headsets implement some form of stereo vision.

This is also why stereo vision, and therefore VR, is so computationally expensive: every frame has to be rendered twice, once per eye.
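The two virtual perspectives can be sketched as two camera positions offset from the centre of the head along its right-pointing axis. This is a minimal sketch under stated assumptions: `eye_positions`, `render_stereo_frame` and the `render_view` callback are hypothetical names, and the default of 0.065 m is simply the average interocular distance mentioned above.

```python
def eye_positions(head_pos, right_dir, ipd_m=0.065):
    """Return (left_eye, right_eye) positions.

    head_pos is the centre of the head and right_dir a unit vector
    pointing to the wearer's right, both as (x, y, z) tuples in metres.
    """
    half = ipd_m / 2.0
    left = tuple(p - half * r for p, r in zip(head_pos, right_dir))
    right = tuple(p + half * r for p, r in zip(head_pos, right_dir))
    return left, right

def render_stereo_frame(head_pos, right_dir, render_view):
    """Render one frame for both eyes.

    render_view is a hypothetical callback that draws the scene from a
    given eye position; it is called twice per frame, which is where
    the extra cost of stereo rendering comes from.
    """
    left, right = eye_positions(head_pos, right_dir)
    return render_view(left), render_view(right)
```

Real engines apply further optimisations, but the basic structure of one render pass per eye stays the same.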

The case of Google Cardboard

Google Cardboard is perhaps the most significant and elegant hack that helped boost the current wave of VR. Released in 2014, it was a simple and revolutionary idea. All you needed was a smartphone and a piece of cardboard with cheap plastic lenses that allowed you to hold the smartphone right in front of your eyes and split the view of the screen. It was (and still is) the cheapest VR headset on the market, mostly because it makes use of a crucial insight: all the tech necessary for successful spatial computing and stereo vision is already packed into your smartphone. Namely:

- The IMU inside the smartphone allows Cardboard to do very basic head tracking.

- The fast smartphone processor allows Cardboard to process head tracking information and render content in a way that is consistent with the head's motion.

- A built-in GPU in your smartphone allows Cardboard to run shaders that are necessary to perform barrel distortion and other optical corrections on the image so that it appears correct to your eye.

- The video processing power of your smartphone and its network connectivity allow it to stream 360 video content.
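The barrel distortion mentioned in the list is typically a radial remapping of image coordinates. The sketch below shows the usual polynomial form in plain Python rather than in a shader. The coefficients `k1` and `k2` are made-up illustrative values, not the parameters of any real Cardboard viewer, which depend on the lenses used.

```python
def barrel_distort(x, y, k1=0.22, k2=0.24):
    """Radially remap a point in lens-centred, normalised coordinates.

    For an output pixel at (x, y), returns the source texture coordinate
    to sample. Sampling further from the centre as the radius grows
    squeezes the image towards the middle, which compensates for the
    pincushion effect of the lenses. k1 and k2 are hypothetical.
    """
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale
```

In a real viewer this function would run per pixel on the GPU, once for each eye's half of the screen.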

Interactivity in Cardboard is fairly limited, as the only other user input available is a single-button controller. Cardboard doesn't do hand tracking either, so no representation of the user's body is possible in VR, which makes the user experience very limited. Cardboard is mostly used for visualization in seated experiences.

Head tracking

Head tracking is used to determine the user's gaze direction and head position in any XR interface. It is the most fundamental technology necessary for VR, as it is what allows the subjective viewpoint that characterizes these experiences. Without head tracking, there's no VR. Some types of AR do not need head tracking though, as the perspective is basically third-person instead of a subjective first-person view.

In early VR systems head tracking was very costly, requiring expensive sensors or customized rooms. With cheap IMUs being part of every smartphone, this crucial piece of the VR puzzle became consumer-grade hardware, cheap enough to make inexpensive headsets possible.
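One common way to turn raw IMU readings into a stable orientation is a complementary filter, which blends the fast but drifting gyroscope with the noisy but drift-free accelerometer. The sketch below does this for a single axis (pitch). It is an illustration of the general technique and not necessarily what any particular headset does. The axis convention, the function names and the blending factor `alpha` are assumptions.

```python
import math

def accel_pitch_deg(ax, ay, az):
    """Pitch implied by the direction of gravity, in degrees.

    Assumes x points forward and z points up when the device is level;
    other devices may use a different axis convention.
    """
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))

def complementary_filter(pitch_deg, gyro_rate_dps, accel, dt, alpha=0.98):
    """Update the pitch estimate with one IMU sample.

    pitch_deg: previous estimate, gyro_rate_dps: angular rate in
    degrees per second, accel: (ax, ay, az) tuple, dt: seconds since
    the previous sample.
    """
    # Integrate the gyroscope: responsive, but drifts over time
    gyro_estimate = pitch_deg + gyro_rate_dps * dt
    # Pull slowly towards the accelerometer reading to cancel drift
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch_deg(*accel)
```

Calling `complementary_filter` once per sensor reading keeps a running estimate of where the head is pointing on that axis.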

Cardboard is good enough to create the illusion of VR, but the tracking mechanisms and cheap sensors make it a fairly primitive user experience compared to higher end headsets.

360 video

The appearance of cheap VR headsets also pushed 360 video camcorders down in price and size. Many content creators considered these two technologies to go hand in hand.

Dutch National Ballet presents Night Fall, the world's first ballet created for Virtual Reality.

Audio spatialization

The illusion of being in a virtual space wouldn't be successful if we only focused on the visual aspect. Human cognition depends to a very large extent on sound cues to understand aspects of the spaces we inhabit. Large spaces with hard reflective surfaces, for example, will produce echoes. Sound is crucial to produce an accurate user experience of a space, and it also helps construct immersive scenes by providing us with cues about parts of the space that are not directly visible, such as what's behind us, below us or above us in a virtual world.

Most of the game engines used in VR support sound spatialization, and you can use these tools to design soundscapes for your VR scenes.
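To give a feel for what a spatializer does internally, here is a deliberately simplified sketch that computes left and right channel gains from the position of a sound source relative to the listener. It combines inverse-distance attenuation with equal-power panning. Game engines use far richer models (head-related transfer functions, reverb, occlusion). The function name, coordinate convention and parameters are assumptions made for this example.

```python
import math

def spatialize(listener_pos, listener_yaw_deg, source_pos, ref_distance=1.0):
    """Return (left_gain, right_gain) for a source on the horizontal plane.

    Positions are (x, z) tuples with x to the right and z forward.
    A yaw of 0 means the listener faces +z; positive yaw turns right.
    """
    dx = source_pos[0] - listener_pos[0]
    dz = source_pos[1] - listener_pos[1]
    distance = math.hypot(dx, dz)
    # Inverse-distance attenuation, clamped so close sources stay at full volume
    attenuation = ref_distance / max(distance, ref_distance)
    # Angle of the source relative to where the listener is facing
    azimuth = math.atan2(dx, dz) - math.radians(listener_yaw_deg)
    pan = math.sin(azimuth)  # -1 is fully left, +1 is fully right
    # Equal-power panning keeps perceived loudness constant across the arc
    angle = (pan + 1.0) * math.pi / 4.0
    return attenuation * math.cos(angle), attenuation * math.sin(angle)
```

Note that this simple model cannot distinguish front from back, which is one of the reasons real spatializers rely on more elaborate filtering.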

Hand tracking

At the time of this writing, the only body part that can be somewhat successfully incorporated in VR, apart from the head (whose tracking is a precondition for a subjective perspective), is the hands. This is done with specialized controllers that are tracked using cameras. These controllers contain various sensors that can be used in the design of VR interfaces. Both the HTC Vive wands and the Oculus Touch are trackable controllers that give your hands a basic presence in the VR world. The Oculus Touch is slightly more advanced, allowing you to do basic finger tracking as well.

The original HTC Vive couldn't do finger tracking. The hand presence was comparable to a rigid stick; in fact, the controller is called a wand. Here we see the wand used as a brush. Observe that the brush has no understanding of finger manipulation: it works as an extension of arm and wrist motion.

Hand object boundary

Finger tracking

The human hand is a complex instrument that allows for very fine manipulation of objects, which in VR isn't really possible, as most interfaces lack the nuance provided by fully articulated fingers. The latest generation of VR headsets, such as the Oculus Quest, can do fairly detailed finger tracking. This makes it possible to simulate the human grasp more accurately and gives additional realism to the body's presence in VR.

Recent work by @pushmatrix (Daniel Beauchamp). Pushmatrix has been doing a lot of interesting experimental work with hand and finger tracking in VR.

Can be downloaded here.

Locomotion solution using finger tracking. Link to tweet

Haptic feedback

We use the word haptic when we mean to say that something can be sensed through touch, normally through our skin. The most common kind of haptic feedback used in VR is vibrohaptics, in which a vibrating component within the VR controller shakes with different levels of strength to signal something to the user. You will have experienced vibrohaptics in your smartphone vibration notifications.

Apart from the basic haptic capabilities of the controllers, some companies are pushing haptic feedback technologies further for use in games and other applications. There are, for example, haptic vests and full-body suits for VR that some games can use to provide a greater range of haptic stimuli. See the websites of bHaptics or KOR-FX vests.

Machine Vision

The Cardboard system has no notion of your body being present in the experience. Using Cardboard is similar to being a disembodied head in a virtual space that exists only around that head. Most Cardboard experiences can be done sitting, as it makes no difference to the interaction whether you are sitting or standing.

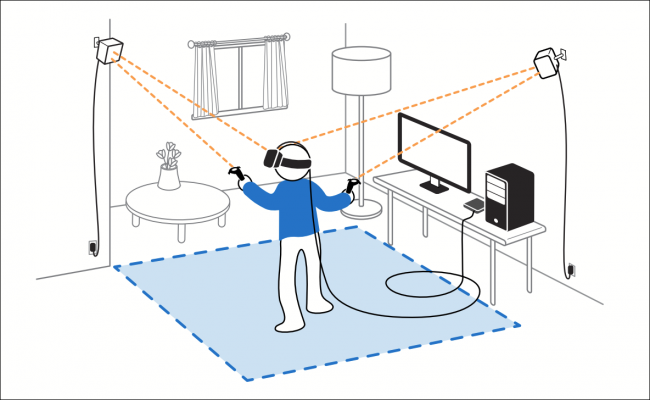

To make the virtual space correspond more closely to your body's movement in physical space, it is necessary to introduce cameras that track you in space. These are the so-called sensors in room-scale VR systems like the Oculus or Vive. These sensors are essentially cameras that scan the space, your body in it and the position of the controllers, and they are crucial to your experience of space in VR.

The need for these sensors gives VR a substantial limitation, in that a VR setup isn't very portable at all. It is common for room-scale VR to have a physical room in your working space to which your system is calibrated.

The latest generation of headsets (Oculus Quest and Vive Pro) have these sensors built into the headset, which theoretically removes the need for a dedicated room and for room calibration, and makes the system more portable.

VR introduces some concerns as an interactive system

- Motion sickness: Performance is crucial in VR. If the work performs badly it can induce motion sickness in the user, which in some cases can lead to vertigo, loss of balance, confusion, etc.

- Situational awareness: Room-scale VR blocks the user's perception of the physical space they are in, so they might move their arms around violently, or move beyond the range of the cables (for wired headsets) or bump into room furniture.

- "The Rat's Tail"

- Infinite Walking

- Depth perception

- Consent in VR (abuse has been recorded in most social VR experiences)

- Transition from VR to RL (e.g. not a good idea to drive right after VR)

Guidelines for immersive VR content

This is an excellent guide by Intel on basic principles to create immersive VR experiences. Read it.

Uses of VR

Previz (pre-visualization)

Architectural, Project pitching, Brands (Audi and IKEA)

IKEA AR Place app

IKEA AR Place app corporate promo

IKEA PLACE AR app easter egg

Other bodies

Ali Eslami SnowVR, album release with Ash Koosha.

The Machine to be Another

Education

Anatomy, Physiology

"VR for Good"

VR has been touted as the ultimate empathy machine. The phrase comes from early cinema theorists who attributed to cinema the capability to help us better understand how others feel. Some VR marketing has adopted this term to describe the capability of this medium to immerse the user in an experience.

Filmmaker Gabo Arora has worked on several award-winning VR documentaries. This is the trailer of Zikr, an immersive documentary about Sufi rituals, filmed using 360 video and Depthkit.

Zikr A Sufi Revival - An Interactive Social VR Experience - Trailer from Barry Pousman on Vimeo.

The United Nations has a VR storytelling initiative called UNVR that serves as a platform for these kinds of immersive narratives, to help decision makers understand pressing issues.

Another example of immersive storytelling specifically conceived as empathetic is "4 Feet: Blind Date", an award-winning 360 film, best explained by its authors in this video.

This virtual-reality project transforms you into a rainforest tree. With your arms as branches and your body as the trunk, you’ll experience the tree’s growth from a seedling into its fullest form and witness its fate firsthand.

Cinema & Interactive story-telling

Cinema, documentary and media festivals like Sundance, SXSW and IDFA have all taken up VR in recent years as a storytelling medium.

Military training

VR venue experiences

There are some VR experiences where the venue plays a crucial role in making the experience immersive. Most of these venues are populated by props, furniture and architectural features that are digitally enhanced and add a level of realism, as your body really interacts with physically existing objects. When your eyes can't see the real world, small tweaks in the physical world that are digitally augmented can have a significant impact on the realism of the experience.

Perhaps the most famous experience of this kind is The VOID.

As a business they function a bit like laser tag halls or escape rooms, where you have to make a booking with a group of people and visit a specific location. Some of these experiences tour as if they were operas or theatre plays.

Social VR (Shared VR)

Interesting Open Source projects

AR

VR as a place that you visit

Milam Wisp - HOUR

Ali Eslami - False Mirror

AR Light Estimation

Virtual assets in AR often do not merge visually with their environment very well. They have an unconvincing look, as if clearly pasted on top of a video feed as an overlay. To integrate digital assets more realistically into an AR scene, it is important that the lighting of the digital assets resembles the lighting in the physical world as closely as possible. Many of the most recent AR toolkits can do that through light estimation.
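Conceptually, once a toolkit reports an estimate of the ambient light in the camera image, the renderer can scale and tint the colour of each virtual asset to match. The sketch below shows that last step only. The function name, the 0 to 1 intensity scale and the optional per-channel tint are assumptions made for illustration. Each toolkit exposes its own units and API for the estimate itself.

```python
def apply_light_estimate(base_rgb, intensity, tint_rgb=(1.0, 1.0, 1.0)):
    """Adjust an asset colour to an estimated ambient light.

    base_rgb: the asset's colour under neutral light, channels in 0..1.
    intensity: hypothetical brightness estimate, 1.0 meaning neutral.
    tint_rgb: hypothetical per-channel colour correction.
    """
    return tuple(
        min(1.0, max(0.0, channel * intensity * tint))
        for channel, tint in zip(base_rgb, tint_rgb)
    )
```

For example, in a dim room with an estimated intensity of 0.5, an asset would be drawn at half its neutral brightness so that it no longer glows against the darker camera feed.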

Apple's ARKit

By Zach Lieberman:

Weird Type by Zach Lieberman:

Android's ARCore

Mapbox

FOVE headset

Tools

Yield to the rabbit 🐰

- Head tracking using WebRTC for head-coupled perspective HCP.

- Video on Head-Coupled Perspective used in the i3D app

- 360 roller coasters on youtube

- 360 frisbee ride

- Youtube VR channel

- XR glossary

- ABC VR report from 1991

- Talk by Be Another Lab

- Cabbi.bo's website is awesome

- Some of Daniel Beauchamp's experiments with direct manipulation

- LEAP research: Exploring the hand object boundary

- On consent Together VR weirdest dev test video